For months, I’ve been following the story that the Mozilla project was set to add closed source Digital Rights Management technology to its free/open browser Firefox, and today they’ve made the announcement, which I’ve covered in depth for The Guardian. Mozilla made the decision out of fear that the organization would haemorrhage users and become irrelevant if it couldn’t support Netflix, Hulu, BBC iPlayer, Amazon Video, and other services that only work in browsers that treat their users as untrustable adversaries.

They’ve gone to great — even unprecedented — lengths to minimize the ways in which this DRM can attack Firefox users. But I think there’s more that they can, and should, do. I also am skeptical of their claim that it was DRM or irrelevance, though I think they were sincere in making it. I think they hate that it’s come to this and that no one there is happy about it.

I could not be more heartsick at this turn of events.

We need to turn the tide on DRM, because there is no place in a post-Snowden, post-Heartbleed world for technology that tries to hide things from its owners. DRM has special protection under the law that makes it a crime to tell people if there are flaws in their DRM-locked systems, so every DRM system is potentially a reservoir of long-lived vulnerabilities that can be exploited by identity thieves, spies, and voyeurs.

It’s clear that Mozilla isn’t happy about this turn of events, and in our conversations, people there characterised it as something they’d been driven to by the entertainment companies and the complicity of the commercial browser vendors, who have enthusiastically sold out their users’ integrity and security.

Mitchell Baker, the executive chairwoman of the Mozilla Foundation and Mozilla Corporation, told me that “this is not a happy day for the web” and “it’s not in line with the values that we’re trying to build. This does not match our value set.”

But both she and Andreas Gal, Mozilla’s chief technology officer, were adamant that they had no choice but to add DRM if they were going to continue Mozilla’s overall mission of keeping the web free and open.

I am sceptical about this claim. I don’t doubt that it’s sincerely made, but I found the case for it weak. When I pressed Gal for evidence that without Netflix Firefox users would switch away, he cited the huge volume of internet traffic generated by Netflix streams.

There’s no question that Netflix video and other video streams account for an appreciable slice of the internet’s overall traffic. But video streams are also the bulkiest files to transfer. That video streams use a lot of bytes isn’t a surprise.

When a charitable nonprofit like Mozilla makes a shift as substantial as this one – installing closed-source software designed to treat computer users as untrusted adversaries – you’d expect there to be a data-driven research story behind it, meticulously documenting the proposition that without DRM irrelevance is inevitable. The large number of bytes being shifted by Netflix is a poor proxy for that detailed picture.

There are other ways in which Mozilla’s DRM is better for user freedom than its commercial competitors’. While the commercial browsers’ DRM assigns unique identifiers to users that can be used to spy on viewing habits across multiple video providers and sessions, the Mozilla DRM uses different identifiers for different services.
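To make the difference concrete, here is a minimal sketch of what "different identifiers for different services" can look like. It is not Mozilla's actual mechanism, which isn't described here; the function name `service_scoped_id`, the per-install secret, and the example origins are all hypothetical. The idea is just that an identifier derived from a local secret plus the requesting site's origin is stable for that site but can't be matched up across sites.

```python
import hashlib
import hmac
import secrets


def service_scoped_id(device_secret: bytes, origin: str) -> str:
    """Derive an identifier that is stable per origin but differs across origins.

    Illustrative only: a keyed hash (HMAC-SHA256) of the origin, using a
    secret that never leaves the user's machine.
    """
    return hmac.new(device_secret, origin.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Hypothetical per-install secret, generated once and kept locally.
    device_secret = secrets.token_bytes(32)

    id_a = service_scoped_id(device_secret, "https://video-a.example")
    id_b = service_scoped_id(device_secret, "https://video-b.example")

    # Each service sees its own stable identifier...
    print(id_a == service_scoped_id(device_secret, "https://video-a.example"))
    # ...but two services comparing notes can't tell it's the same user.
    print(id_a != id_b)
```

A single global identifier, by contrast, would be the same string no matter which service asked for it, which is what makes cross-service tracking possible.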

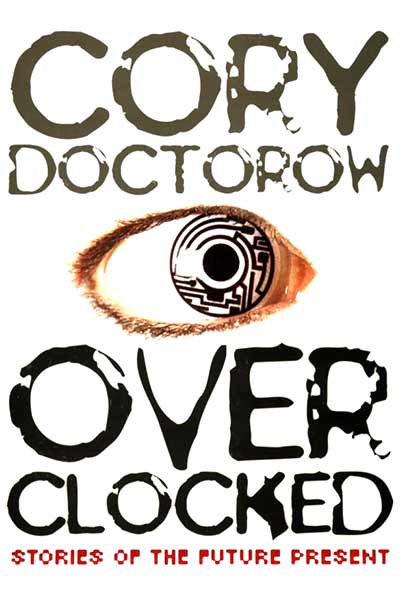

Firefox’s adoption of closed-source DRM breaks my heart